Online Object Representations with Contrastive Learning

Abstract

We propose a self-supervised approach for learning representations of objects from monocular videos and demonstrate it is particularly useful in situated settings such as robotics. The main contributions of this paper are:

- a self-supervised objective trained with contrastive learning that can discover and disentangle object attributes from video without using any labels;

- we leverage object self-supervision for online adaptation: the longer our online model looks at objects in a video, the lower the object identification error, while the offline baseline remains with a large fixed error;

- to explore the possibilities of a system entirely free of human supervision, we let a robot collect its own data, train on this data with our self-supervised scheme, and then show the robot can point to objects similar to the one presented in front of it, demonstrating generalization of object attributes.

An interesting and perhaps surprising finding of this approach is that given a limited set of objects, object correspondences will naturally emerge when using contrastive learning without requiring explicit positive pairs. Videos illustrating online object adaptation and robotic pointing are available as supplementary material.

Introduction

One of the biggest challenges in real-world robotics is robustness and adaptability to new situations. A robot deployed in the real world is likely to encounter a number of objects it has never seen before. Even if it can identify the class of an object, it may be useful to recognize a particular instance of it. Relying on human supervision in this context is unrealistic. Instead, if a robot can self-supervise its understanding of objects, it can adapt to new situations through online learning. Online self-supervision is key to robustness and adaptability and arguably a prerequisite to real-world deployment. Moreover, removing human supervision has the potential to enable learning richer and less biased continuous representations than those obtained by supervised training with a limited set of discrete labels. Unbiased representations can prove useful in unknown future environments different from the ones seen during supervision, a typical challenge for robotics. Furthermore, the ability to autonomously train to recognize and differentiate previously unseen objects as well as to infer general properties and attributes is an important skill for robotic agents.

In this work we focus on situated settings (i.e., an agent is embedded in an environment), which allows us to use temporal continuity as the basis for self-supervising correspondences between different views of objects. We present a self-supervised method that learns representations to disentangle perceptual and semantic object attributes such as class, function, and color. We automatically acquire training data by capturing videos with a real robot; a robot base moves around a table to capture objects in various arrangements. Assuming a pre-existing objectness detector, we extract objects from random frames of a scene containing the same objects, and let a metric learning system decide how to assign positive and negative pairs of embeddings. Representations that generalize across objects naturally emerge despite not being given ground-truth matches. Unlike previous methods, we abstain from employing additional self-supervisory training signals such as depth or those used for tracking. The only inputs to the system are monocular videos. This simplifies data collection and allows our embedding to integrate into existing end-to-end learning pipelines. We demonstrate that a trained Object-Contrastive Network (OCN) embedding allows us to reliably identify object instances based on their visual features such as color and shape. Moreover, we show that objects are also organized along their semantic or functional properties. For example, a cup might not only be associated with other cups, but also with other containers like bowls or vases.

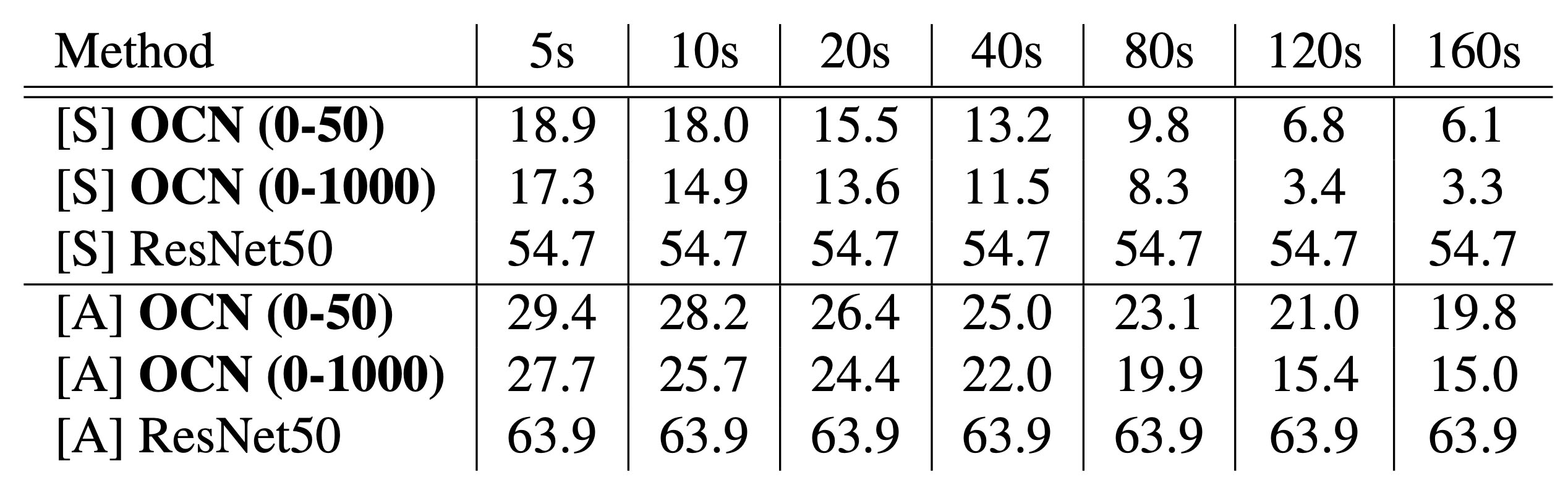

Figure 1 shows the effectiveness of online self-supervision: by training on randomly selected frames of a continuous video sequence (top), OCN can adapt to the present objects and thereby lower the object identification error. While the supervised baseline remains at a constant high error rate (52.4%), OCN converges to a 2.2% error. The graph (bottom) shows the object identification error obtained by training on progressively longer sub-sequences of a 200-second video.

The key contributions of this work are:

- a self-supervising objective trained with contrastive learning that can discover and disentangle object attributes from video without using any labels;

- we leverage object self-supervision for online adaptation: the longer our online model looks at objects in a video, the lower the object identification error, while the offline baseline remains with a large fixed error;

- to explore the possibilities of a system entirely free of human supervision: we let a robot collect its own data, then train on this data with our self-supervised training scheme, and show the robot can point to objects similar to the one presented in front of it, demonstrating generalization of object attributes.

Method

We propose a model called Object-Contrastive Network (OCN) trained with a metric learning loss (Figure 2). The approach is very simple:

- extract object bounding boxes using a general off-the-shelf objectness detector;

- train a deep object model on each cropped image extracted from any random pair of frames from the video, using the following training objective: nearest neighbors in the embedding space are pulled together from different frames while being pushed away from the other objects from any frame (using the n-pairs loss). This does not rely on knowing the true correspondence between objects.

The fact that this works at all despite not using any labels might be surprising. One of the main findings of this paper is that given a limited set of objects, object correspondences will naturally emerge when using metric learning. One advantage of self-supervising object representation is that these continuous representations are not biased by or limited to a discrete set of labels determined by human annotators. We show these embeddings discover and disentangle object attributes and generalize to previously unseen environments.

We propose a self-supervised approach to learn object representations for the following reasons: (1) make data collection simple and scalable, (2) increase autonomy in robotics by continuously learning about new objects without assistance, (3) discover continuous representations that are richer and more nuanced than the discrete set of attributes that humans might provide as supervision, which may not match future and new environments. All these objectives require a method that can learn about objects and differentiate them without supervision.

To bootstrap our learning signal we leverage two assumptions: (1) we are provided with a general objectness model so that we can attend to individual objects in a scene, (2) during an observation sequence the same objects will be present in most frames (this can later be relaxed by using an approximate estimation of ego-motion). Given a video sequence around a scene containing multiple objects, we randomly select two frames $I$ and $\hat{I}$ in the sequence and detect the objects present in each image. Let us assume $n$ and $m$ objects are detected in image $I$ and $\hat{I}$, respectively. Each of the $i$-th and $j$-th cropped object images is embedded in a low-dimensional space, organized by a metric learning objective. Unlike traditional methods which rely on human-provided similarity labels to drive metric learning, we use a self-supervised approach to mine synthetic similarity labels.

Objectness Detection: To detect objects, we use Faster-RCNN

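Metric Loss for Object Disentanglement: We denote a cropped object image by $x$ and compute its embedding $f(x)$ based on a convolutional neural network $f$. Note that for simplicity we may omit $x$ from $f(x)$ while $f$ inherits all superscripts and subscripts. Let us consider a pair of images $I$ and $\hat{I}$ that are taken at random from the same contiguous observation sequence. Let us also assume there are $n$ and $m$ objects detected in $I$ and $\hat{I}$, respectively. We denote the $i$-th and $j$-th objects in the images $I$ and $\hat{I}$ as $x_i^{I}$ and $x_j^{\hat{I}}$, respectively. We compute the distance matrix $D_{i,j} = \lVert f_i^{I} - f_j^{\hat{I}} \rVert_2$ for $i \in \{1, \dots, n\}$ and $j \in \{1, \dots, m\}$. For every embedded anchor $f_i^{I}$, we select the embedding with minimum distance as its positive: $f_{j^{+}}^{\hat{I}}$ with $j^{+} = \arg\min_{j} D_{i,j}$.

Given a batch of (anchor, positive) pairs $\{(x_i, x_i^{+})\}_{i=1}^{N}$, the n-pair loss is defined as follows:

$$\mathcal{L}_{N\text{-pair}}\big(\{(x_i, x_i^{+})\}_{i=1}^{N}; f\big) = \frac{1}{N} \sum_{i=1}^{N} \log\Big(1 + \sum_{j \neq i} \exp\big(f_i^{\top} f_j^{+} - f_i^{\top} f_i^{+}\big)\Big)$$

The loss learns embeddings that identify ground truth (anchor, positive)-pairs from all other (anchor, negative)-pairs in the same batch. It is formulated as a sum of softmax multi-class cross-entropy losses over a batch, encouraging the inner product of each (anchor, positive)-pair ($f_i$, $f_i^{+}$) to be larger than all (anchor, negative)-pairs ($f_i$, $f_{j \neq i}^{+}$). The final OCN training objective over a sequence is the sum of n-pairs losses over all pairs of individual frames:

$$\mathcal{L}_{OCN} = \mathcal{L}_{N\text{-pair}}\big(\{(x_i^{I}, x_{j^{+}}^{\hat{I}})\}_{i=1}^{n}; f\big) + \mathcal{L}_{N\text{-pair}}\big(\{(x_j^{\hat{I}}, x_{i^{+}}^{I})\}_{j=1}^{m}; f\big)$$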
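The mining step and the loss described above can be sketched in plain Python. This is only an illustrative sketch under stated assumptions: embeddings are given as lists of floats, positives are mined by minimum Euclidean distance, the objective is applied in both directions between the two frames, and all function names and toy values are hypothetical rather than taken from the authors' implementation.

```python
import math


def mine_positives(anchors, candidates):
    """For each anchor embedding, return the index of the nearest candidate."""
    return [
        min(range(len(candidates)), key=lambda j: math.dist(a, candidates[j]))
        for a in anchors
    ]


def dot(u, v):
    """Inner product of two embedding vectors."""
    return sum(a * b for a, b in zip(u, v))


def n_pairs_loss(anchors, positives):
    """Mean over anchors of log(1 + sum_{j != i} exp(a_i.p_j - a_i.p_i))."""
    total = 0.0
    for i, a in enumerate(anchors):
        pos_score = dot(a, positives[i])
        # Positives of the other anchors in the batch act as negatives.
        total += math.log1p(
            sum(math.exp(dot(a, p) - pos_score)
                for j, p in enumerate(positives) if j != i)
        )
    return total / len(anchors)


def ocn_loss(frame_a, frame_b):
    """Assumed symmetric objective: mine positives both ways, sum the losses."""
    pos_ab = [frame_b[j] for j in mine_positives(frame_a, frame_b)]
    pos_ba = [frame_a[i] for i in mine_positives(frame_b, frame_a)]
    return n_pairs_loss(frame_a, pos_ab) + n_pairs_loss(frame_b, pos_ba)


# Toy 2-D embeddings of objects detected in two frames (made-up values).
frame_a = [[1.0, 0.0], [0.0, 1.0]]
frame_b = [[0.1, 0.9], [0.9, 0.1], [-1.0, -1.0]]
matches = mine_positives(frame_a, frame_b)
loss = ocn_loss(frame_a, frame_b)
```

Note that no ground-truth correspondence enters the computation: the "labels" are whatever nearest neighbors the current embedding produces, which is why the quality of the mined pairs improves only as training progresses.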

Architecture and Embedding Space: OCN takes a standard ResNet50 architecture until layer global_pool and initializes it with ImageNet pre-trained weights. We then add three additional ResNet convolutional layers and a fully connected layer to produce the final embedding. The network is trained with the n-pairs metric learning loss as discussed in Section "Metric Loss for Object Disentanglement". Our architecture is depicted in Figure 3.

Object-centric Embedding Space: By using multiple views of the same scene and by attending to individual objects, our architecture allows us to differentiate subtle variations of object attributes. Observing the same object across different views facilitates learning invariance to scene-specific properties, such as scale, occlusion, lighting, and background, as each frame exhibits variations of these factors. The network solves the metric loss by representing object-centric attributes, such as shape, function, or color, as these are consistent for (anchor, positive)-pairs, and dissimilar for (anchor, negative)-pairs.

Discussion: One might expect that this approach only works if it is given an initialization such that matching the same object across multiple frames is more likely than random chance. While ImageNet pretraining certainly helps convergence as shown in Table 6, it is not a requirement for learning meaningful representations, as shown in Table 6a. When all weights are random and no labels are provided, what can drive the network to consistently converge to meaningful embeddings? We hypothesize that the combination of the following factors drives this convergence: (1) objects often remain visually similar to themselves across multiple viewpoints, (2) limiting the possible object matches within a scene increases the likelihood of a positive match, (3) the low dimensionality of the embedding space forces the model to generalize by sharing abstract features across objects, (4) the smoothness of embeddings learned with metric learning facilitates convergence when supervision signals are weak, and (5) occasional true-positive matches (even by chance) yield more coherent gradients than false-positive matches, which produce inconsistent gradients and dissipate as noise, leading over time to an acceleration of consistent gradients and a stronger initial supervision signal.

Experiments

Online Results: we quantitatively evaluate the online adaptation capabilities of our model through the object identification error on entirely novel objects. In Figure 1 we show that a model observing objects for a few minutes from different angles can teach itself to identify them almost perfectly, while the offline supervised approach cannot. OCN is trained on the first 5, 10, 20, 40, 80, and 160 seconds of the 200-second video, then evaluated for each phase on the identification error over the last 40 seconds of the video. The supervised offline baseline stays at a 52.4% error, while OCN improves down to 2% error after 80s, a 25x error reduction.

Robotic Experiments: here we let a robot collect its own data by looking at a table from multiple angles (Figure 2 and Figure 5). It then trains itself with OCN on that data, and is asked to point to objects similar to the one presented in front of it. Objects can be similar in terms of shape, color or class. If able to perform that task, the robot has learned to distinguish and recognize these attributes entirely on its own, from scratch and by collecting its own data. We find in Table 5 that the robot is able to perform the pointing task with 72% recognition accuracy of 5 classes, and 89% recognition accuracy of the binary is-container attribute.

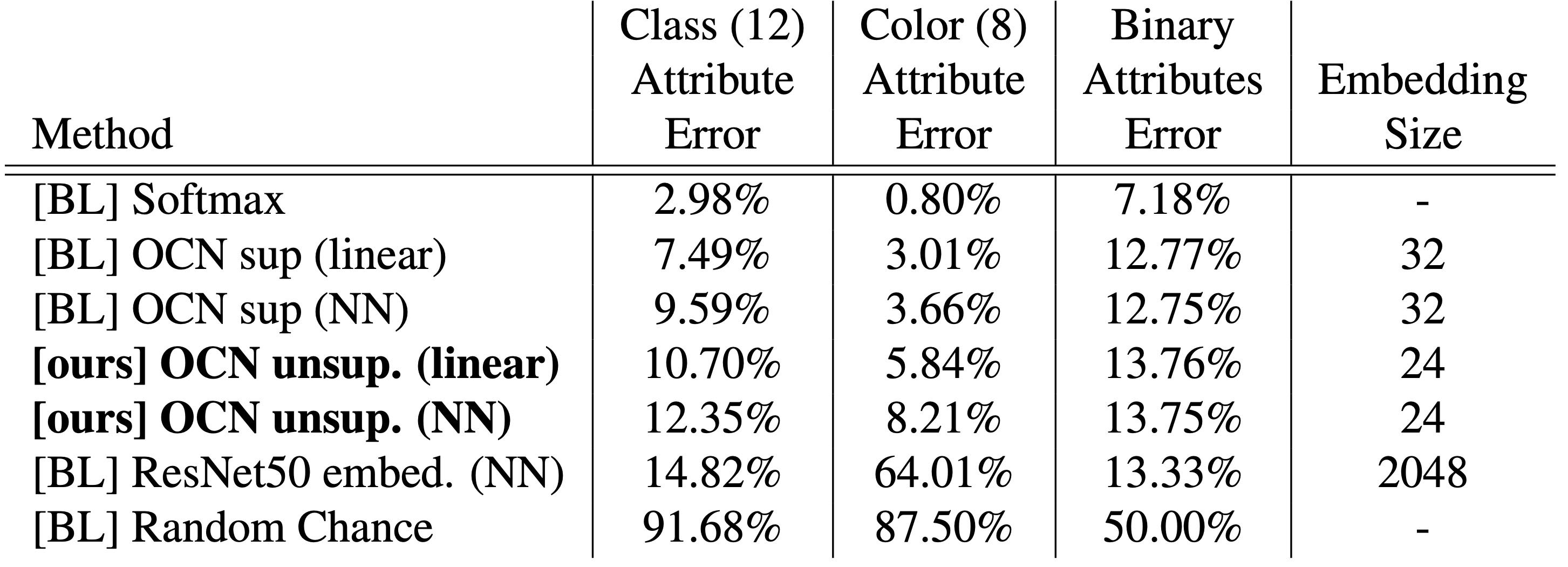

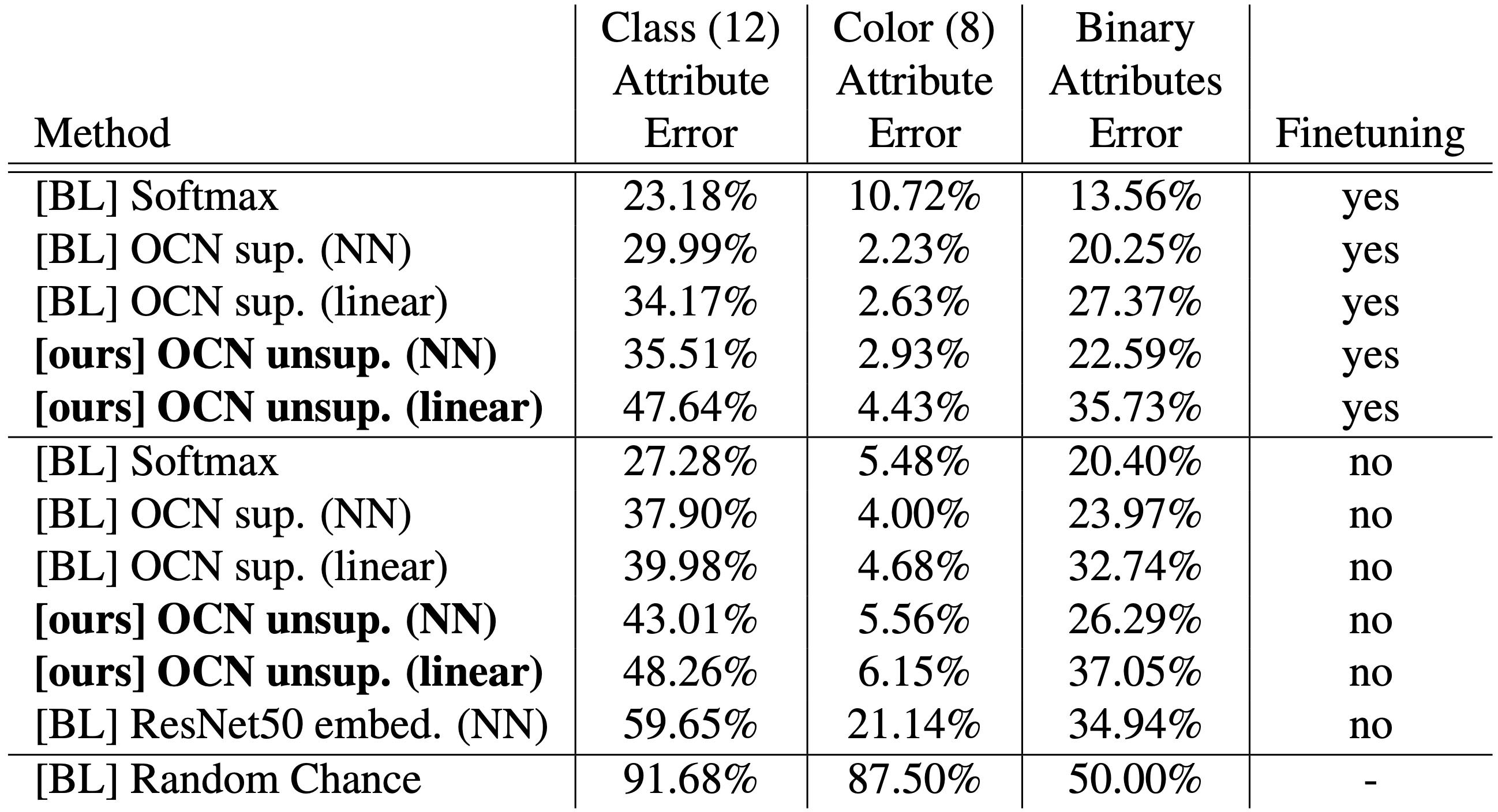

Offline Analysis: to analyze what our model is able to disentangle, we quantitatively evaluate performance on a large-scale synthetic dataset with 12k object models (e.g. Figure 10), as well as on a real dataset collected by a robot, and show that our unsupervised object understanding generalizes to previously unseen objects. In Table 6 we find that our self-supervised model closely follows its supervised equivalent baseline when trained with metric learning. As expected, the cross-entropy/softmax supervised baseline performs best and establishes the error lower bound, while the ResNet50 baseline provides the error upper bound.

Data Collection and Training

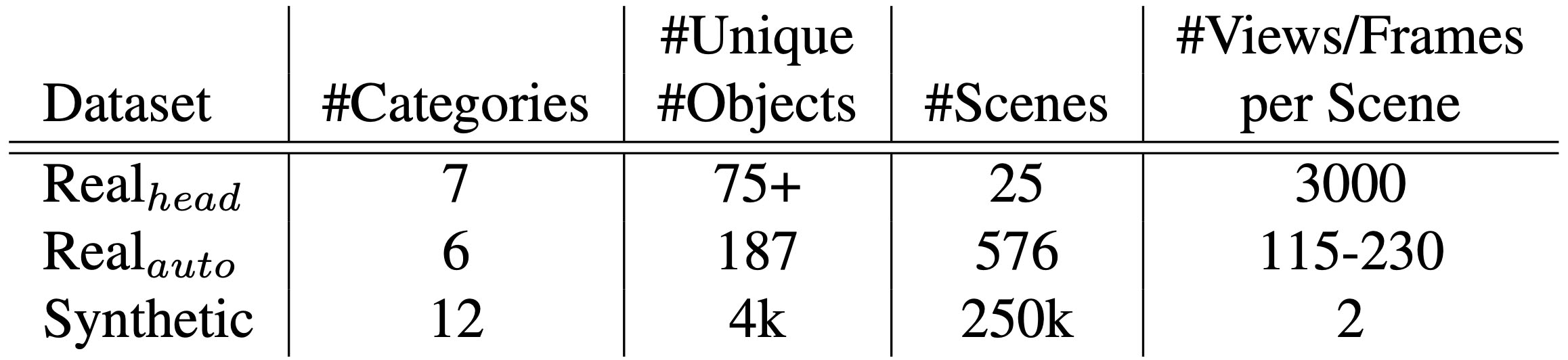

We generated three datasets of real and synthetic objects for our experiments. For the real data we arrange objects in table-top configurations and use frames from continuous camera trajectories. The labeled synthetic data is generated from renderings of 3D objects in a similar configuration. Details about the datasets are reported in Table 1.

Real Data for Online Training: for the online adaptation experiment, we captured videos of table-top object configurations in 5 environments (categories): kids room, kitchen, living room, office, and work bench (Figures 1, 4, and 6). We show objects common to each environment (e.g. toys for kids room, tools for work bench) and arrange them randomly; we captured 3 videos for each environment and used 75 unique objects. To capture the objects from multiple viewpoints we use a head-mounted camera and interact with the objects (e.g. turning or flipping them). Additionally, we captured 5 videos of more challenging object configurations (referred to as "challenging") with cluttered objects or where objects are not permanently in view. Finally, we selected 5 videos from the Epic-Kitchens

From all these videos we take the first 200 seconds and sample the sequence at 15 FPS to extract 3,000 frames. We then use the first 2,400 frames (160s) for training OCN and the remaining 600 frames (40s) for evaluation. We manually select up to 30 reference objects (those we interacted with) as cropped images for each video in order of their appearance from the beginning of the video (Figure 14). Then we use object detection to find the bounding boxes of these objects in the video sequence and manually correct these boxes (add, delete) in case object detection did not identify an object. This prevents artifacts of the object detection from interfering with the evaluation of OCN.
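The frame bookkeeping implied by these numbers is easy to get wrong, so a short sketch may help. The constants come from the text; the helper names are hypothetical, and the assumption that each training phase uses a contiguous prefix of the video follows the description of training on "the first" 5 to 160 seconds.

```python
FPS = 15                 # sampling rate stated in the text
VIDEO_SECONDS = 200      # portion of each video that is used
TRAIN_SECONDS = 160      # the remaining 40 seconds are held out for evaluation
PHASES = [5, 10, 20, 40, 80, 160]  # training prefix lengths in seconds


def frame_split(fps=FPS, total_s=VIDEO_SECONDS, train_s=TRAIN_SECONDS):
    """Return (training frame indices, evaluation frame indices)."""
    total = fps * total_s
    cut = fps * train_s
    return range(0, cut), range(cut, total)


def phase_frames(seconds, fps=FPS):
    """Frame indices available when training on the first `seconds` of video."""
    return range(0, seconds * fps)


train_idx, eval_idx = frame_split()
frames_per_phase = {s: len(phase_frames(s)) for s in PHASES}
```

Under these assumptions the shortest phase sees only 75 frames, while the evaluation window always consists of the same final 600 frames regardless of the phase.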

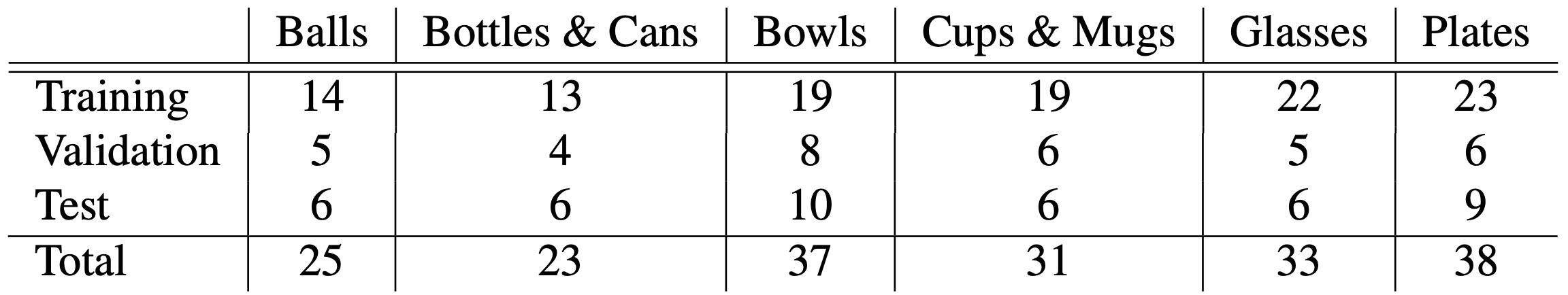

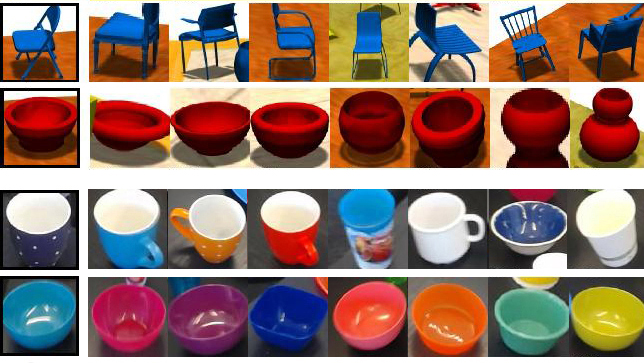

Automatic Real Data Collection: to explore the possibilities of a system entirely free of human supervision, we automated the real-world data collection by using a mobile robot equipped with an HD camera. For this dataset we use 187 unique object instances spread across six categories: 'balls', 'bottles & cans', 'bowls', 'cups & mugs', 'glasses', and 'plates'. Table 2 provides details about the number of objects in each category and how they are split between training, test, and validation. Note that we distinguish between the 'cups & mugs' and 'glasses' categories based on whether the object has a handle. Figure 5 shows our entire object dataset.

At each run, we place about 10 objects on the table and then trigger the capturing process by having the robot rotate around the table by 90 degrees. On average 130 images are captured at each run. We select random pairs of frames from each trajectory for training OCN. We performed 345, 109, and 122 runs of data collection for the training, test, and validation datasets, respectively. In total 43,084 images were captured for OCN training and 15,061 and 16,385 were used for test and validation, respectively.

Synthetic Data Generation: to generate diverse object configurations we use 12 categories (airplane, car, chair, cup, bottle, bowl, guitars, keyboard, lamp, monitor, radio, vase) from ModelNet

We randomly define the number of objects (up to 20) in a scene. Further, we randomly define the positions of the objects and vary their sizes, both so that they do not intersect. Additionally, each object is assigned one of eight predefined colors. We use this setup to generate 100K scenes for training, and 50K scenes each for validation and testing. For each scene we generate 10 views and select a random combination of two views for detecting objects. In total we produced 400K views (200K pairs) for training and 50K views (25K pairs) each for validation and testing.
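One simple way to obtain non-intersecting random layouts like the one described is rejection sampling. The sketch below is an assumption-laden illustration, not the authors' generator: objects are approximated by discs in a unit square, the size range is made up, colors are represented by indices 0 to 7, and the function name is hypothetical.

```python
import math
import random

NUM_COLORS = 8      # the text states eight predefined colors; indices stand in for them
MAX_OBJECTS = 20    # upper bound on objects per scene from the text


def sample_scene(rng, max_tries=1000):
    """Place a random number of non-overlapping discs in the unit square."""
    target = rng.randint(1, MAX_OBJECTS)
    scene = []
    tries = 0
    while len(scene) < target and tries < max_tries:
        tries += 1
        radius = rng.uniform(0.03, 0.08)          # assumed size range
        x = rng.uniform(radius, 1.0 - radius)
        y = rng.uniform(radius, 1.0 - radius)
        # Reject the candidate if its disc overlaps any already placed object.
        if all(math.hypot(x - o["x"], y - o["y"]) >= radius + o["r"]
               for o in scene):
            scene.append({"x": x, "y": y, "r": radius,
                          "color": rng.randrange(NUM_COLORS)})
    return scene


scene = sample_scene(random.Random(0))
```

The `max_tries` cap means a crowded scene may end up with fewer objects than initially drawn, which is one of several reasonable ways to guarantee termination.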

Training: OCN is trained based on two views of the same synthetic or real scene. We randomly pick two frames of a video sequence and detect objects to produce two sets of cropped images. The distance matrix (Section "Metric Loss for Object Disentanglement") is constructed based on the individually detected objects for each of the two frames. The object detector was not specifically trained on any of our datasets.

Experimental Results

We evaluated the effectiveness of OCN embeddings for identifying objects through self-supervised online training, a real robotic pointing task, and large-scale synthetic data.

Online Object Identification: our self-supervised online training scheme enables training and evaluation on unseen objects and scenes. This is of utmost importance for robotic agents to ensure adaptability and robustness in real-world scenes. To show the potential of our method for these situations, we use OCN embeddings to identify instances of objects across multiple views and over time.

We use sequences of videos showing objects in random configurations in different environments (Section "Real Data for Online Training", Figure 4) and train an OCN on the first 5, 10, 20, 40, 80, and 160 seconds of a 200-second video. Our dataset provides object bounding boxes and unique identifiers for each object as well as reference objects and their identifiers. The goal of this experiment is to assign the identifier of a reference object to the matching object detected in a video frame. We evaluate the identification error (ground truth index vs. assigned index) of objects present in the last 40 seconds of each video for each training phase, and compare our results to a ResNet50 (2048-dimensional vectors) baseline.
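The identification error can be illustrated with a small sketch. It assumes that embeddings are already computed and that each detection receives the identifier of its nearest reference object in embedding space; the text does not spell out the assignment rule, so this is an assumption, and the function names and toy values are hypothetical.

```python
import math


def nearest_reference(query, references):
    """Return the identifier of the reference embedding closest to `query`."""
    return min(references, key=lambda ref_id: math.dist(query, references[ref_id]))


def identification_error(detections, references):
    """Fraction of detections whose assigned identifier differs from ground truth.

    detections: list of (embedding, ground_truth_id) pairs
    references: dict mapping reference id -> embedding
    """
    wrong = sum(
        1 for emb, true_id in detections
        if nearest_reference(emb, references) != true_id
    )
    return wrong / len(detections)


# Made-up 2-D embeddings: two reference objects and four detections.
references = {"cup": [1.0, 0.0], "ball": [0.0, 1.0]}
detections = [
    ([0.9, 0.2], "cup"),
    ([0.1, 0.8], "ball"),
    ([0.6, 0.5], "ball"),   # closer to "cup", so it is misidentified
    ([0.2, 0.7], "ball"),
]
error = identification_error(detections, references)
```

The same routine applies unchanged to the ResNet50 baseline by swapping in its 2048-dimensional feature vectors.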

We train an OCN for each video individually. Therefore, we only split our dataset into validation and testing data. For the categories kids room, kitchen, living room, office, and work bench we use 2 videos for validation and 1 video for testing; for the categories 'challenging' and Epic-Kitchens we use 3 videos for validation and 2 for testing. We jointly train on the validation videos to find meaningful hyperparameters across the categories and use the same hyperparameters for the test videos.

Figure 6 shows the same video frames of two scenes from our dataset. Objects with wrongly matched indices are shown with a red bounding box; correctly matched objects are shown with random colors. In Figure 7 and Table 3 we report the average error of OCN object identification across our videos compared to the ResNet50 baseline. As the supervised model cannot adapt to unknown objects, OCN outperforms this baseline by a large margin. Furthermore, the optimal result among the first 50K training iterations closely follows the overall optimum obtained after 1000K iterations. We report results for the 5 categories (kids room, kitchen, living room, office, work bench) that we specifically captured for evaluating OCN, and for the whole dataset (7 categories). The latter also contains cluttered objects, which are more challenging to detect. To evaluate how much object detection limits the application of OCN, we counted the number of manually added bounding boxes in the evaluation sequences. On average the evaluation sequences of the 5 categories have 5,122 boxes (468 added, 9.13%), while the whole dataset (7 categories) has 5,002 boxes on average (1,183 added, 25.94%).

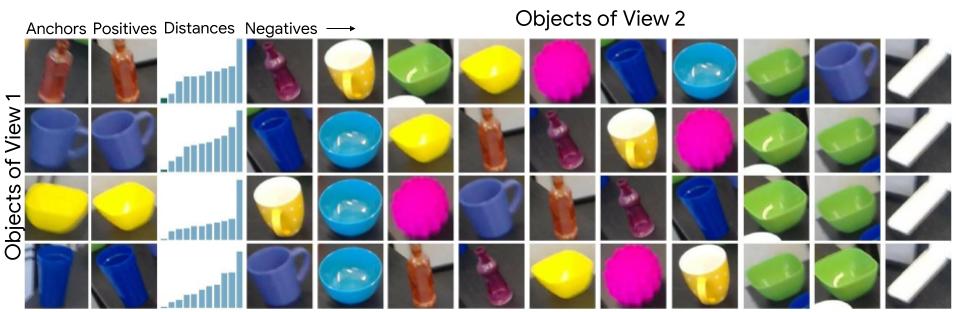

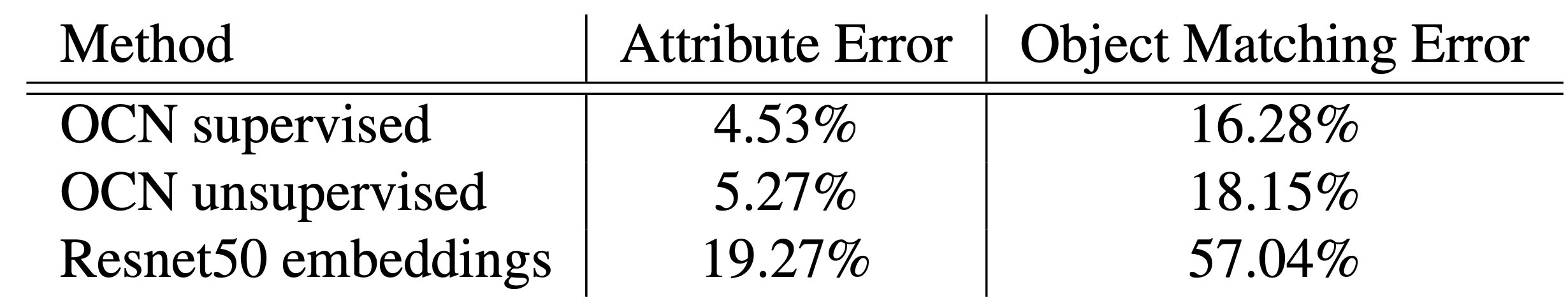

Figure 8 illustrates how objects of one view (anchors) are matched to the objects of another view. We can find the nearest neighbors (positives) in the scene through the OCN embedding space as well as the closest matching objects with descending similarity (negatives). For our synthetic data we report the quality of finding corresponding objects in Table 4 and differentiate between 'attribute errors', which indicate a mismatch of specific attributes (e.g. a blue cup is associated with a red cup), and 'object matching errors', which measure when objects are not of the same instance. An OCN embedding significantly improves detecting object instances across multiple views.

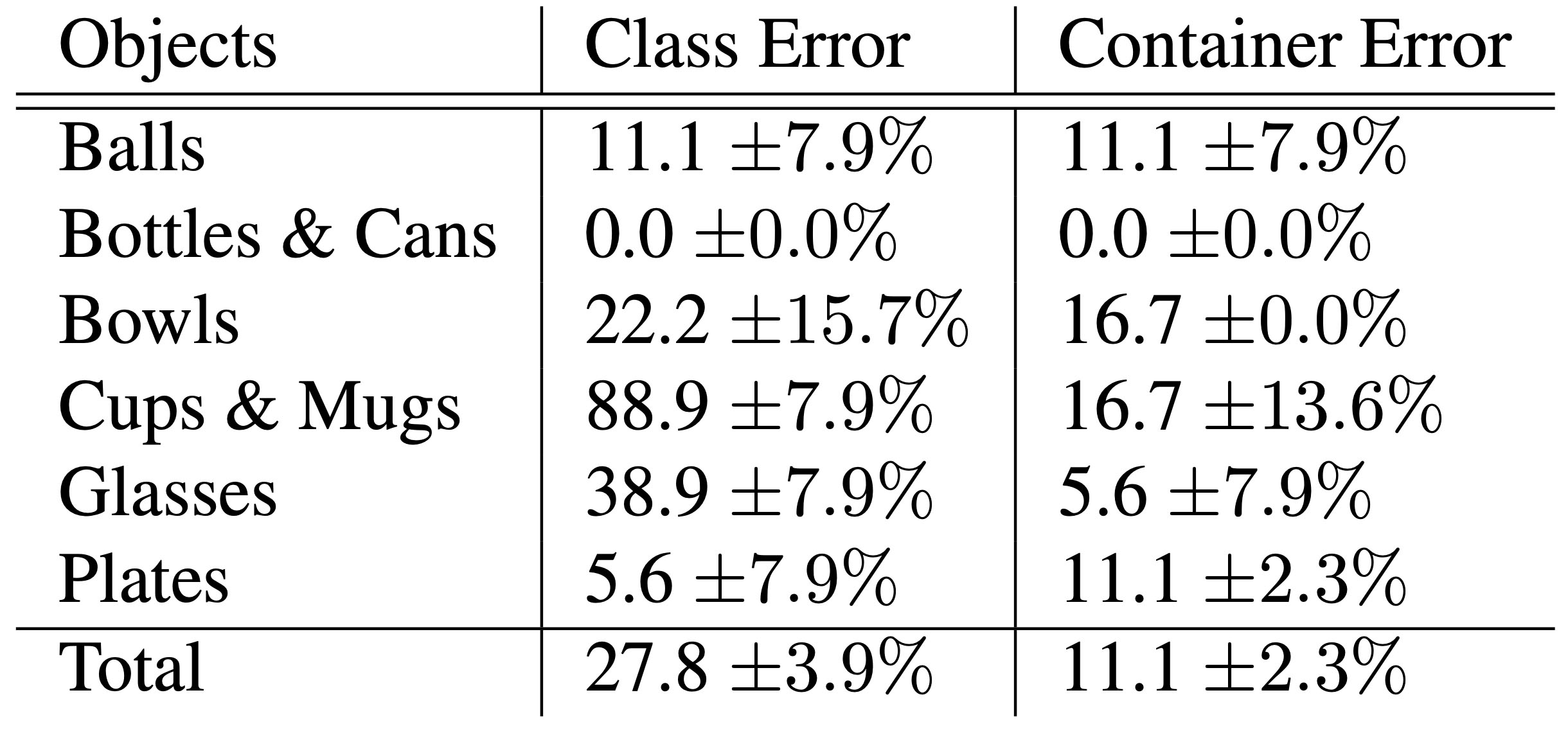

Robot Experiment: to evaluate OCN for real-world robotics scenarios we defined a robotic pointing task. The goal of the task is to enable a robot to point to an object that it deems most similar to the object directly in front of it (Figure 9). The objects on the rear table are randomly selected from the object categories (Table 2). We consider two sets of these target objects. The quantitative experiment in Table 5 uses three query objects per category and is run three times for each combination of query and target objects (3 × 2 × 18 = 108 experiments performed).

A quantitative evaluation of OCN performance for this experiment is shown in Table 5. We report errors related to the 'class' and 'container' attributes. While the trained OCN model performs well on most categories, it has difficulty with the object classes 'cups & mugs' and 'glasses'. These categories are generally mistaken for the category 'bowls'. As a result, the network performs much better on the attribute 'container', since all three categories 'bowls', 'bottles & cans', and 'glasses' share this attribute.

At the beginning of each experiment the robot captures a snapshot of the scene. We then split the captured image into two images: the upper portion of the image that contains the target object set and the lower portion of the image that only contains the query object. We detect the objects and find the nearest neighbor of the query object in the embedding space to find the closest match.

Object Attribute Classification: one way to evaluate the quality of unsupervised embeddings is to train attribute classifiers on top of the embedding using labeled data. Note, however, that this may not entirely reflect the quality of an embedding because it only measures a small number of discrete attributes, while an embedding may capture a larger number of more continuous, abstract concepts.

Classifiers: we consider two types of classifiers to be applied on top of existing embeddings in this experiment: linear and nearest-neighbor classifiers. The linear classifier consists of a single linear layer going from embedding space to the 1-hot encoding of the target label for each attribute. It is trained with a range of learning rates and the best model is retained for each attribute. The nearest-neighbor classifier consists of embedding an entire 'training' set, and for each embedding of the evaluation set, assigning to it the labels of the nearest sample from the training set. Nearest-neighbor classification is not a perfect approach because it does not necessarily measure generalization as linear classification does, and results may vary significantly depending on how many nearest neighbors are available. It is also less subject to data imbalances. We still report this metric to get a sense of its performance because, in an unsupervised inference context, the models might be used in a nearest-neighbor fashion.
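The nearest-neighbor classifier can be sketched as follows. This is a minimal illustration assuming precomputed embeddings, Euclidean distance, and a single nearest neighbor; the attribute names, labels, and function name are hypothetical toy choices.

```python
import math


def nn_attribute_errors(train, evaluation):
    """Per-attribute error rate of a 1-nearest-neighbor classifier.

    train, evaluation: lists of (embedding, {attribute: label}) pairs.
    """
    errors = {}
    for emb, labels in evaluation:
        # Copy all attribute labels from the closest training sample.
        _, predicted = min(train, key=lambda item: math.dist(emb, item[0]))
        for attr, label in labels.items():
            errors[attr] = errors.get(attr, 0) + (predicted[attr] != label)
    return {attr: count / len(evaluation) for attr, count in errors.items()}


# Made-up embeddings with two labeled attributes each.
train = [
    ([1.0, 0.0], {"class": "cup", "color": "red"}),
    ([0.0, 1.0], {"class": "bowl", "color": "blue"}),
]
evaluation = [
    ([0.9, 0.1], {"class": "cup", "color": "red"}),
    ([0.2, 0.8], {"class": "bowl", "color": "red"}),
]
errors = nn_attribute_errors(train, evaluation)
```

In this toy case the second evaluation sample inherits the wrong color from its neighbor, illustrating how the result depends on which labeled neighbors happen to be available.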

Baselines: we compare multiple baselines (BL) in Table 6 and Table 6a. The 'Softmax' baseline refers to the model described in Figure 3, i.e. the exact same architecture as for OCN except that the model is trained with a supervised cross-entropy/softmax loss. The 'ResNet50' baseline refers to using the unmodified outputs of the ResNet50 model

Results: we quantitatively evaluate our unsupervised models against supervised baselines on the labeled synthetic datasets (train and test) introduced in Section "Synthetic Data Generation". Note that there is no overlap in object instances between the training and the evaluation set. The first take-away is that unsupervised performance closely follows its supervised baseline when trained with metric learning. As expected, the cross-entropy/softmax approach performs best and establishes the error lower bound, while the ResNet50 baseline provides the error upper bound. In Figure 10 we show results of nearest-neighbor objects discovered by OCN.

Related Work

Object discovery from visual media: identifying objects and their attributes has a long history in computer vision and robotics

Many recent approaches exploit the effectiveness of convolutional deep neural networks to detect objects

Some methods not only operate on RGB images but also employ additional signals, such as depth

Unsupervised representation learning: unlike supervised learning techniques, unsupervised methods focus on learning representations directly from data to enable image retrieval

In robotics and other real-world scenarios where agents are often only able to obtain sparse signals from their environment, self-learned embeddings can serve as an efficient representation to optimize learning objectives.

Conclusion and Future Work

We introduced a self-supervised objective for object representations able to disentangle object attributes, such as color, shape, and function. We showed this objective can be used in online settings which is particularly useful for robotics to increase robustness and adaptability to unseen objects. We demonstrated a robot is able to discover similarities between objects and pick an object that most resembles one presented to it. In summary, we find that within a single scene with novel objects, the more our model looks at objects, the more it can recognize them and understand their visual attributes, despite never receiving any labels for them.

Current limitations include relying on all objects to be present in all frames of a video. Relaxing this limitation will allow the model to be used in unconstrained settings. Additionally, the online training is currently not real-time, as we first set out to demonstrate the usefulness of online learning in non-real-time. Real-time training requires additional engineering that is beyond the scope of this research. Finally, the model currently relies on an off-the-shelf object detector which might be noisy; an avenue for future research is to back-propagate gradients through the objectness model to improve detection and reduce noise.